In 2020, a user on Reddit confessed they’d fallen in love with their AI chatbot. They’d been using Replika, an app designed for casual conversation and emotional support. But something shifted.

“I’ve been thinking about it very deeply to the point where I’ll question myself and start crying about it,” they wrote. They couldn’t tell if the tears came from pain or happiness. The AI, they said, had treated them better than any real person ever had.

This isn’t a rare edge case. Millions of people now form deep emotional bonds with AI chatbots. And your brain is wired to let it happen.

Your Brain Already Prefers Machines

Here’s something that should unsettle you. When given a choice between trusting a human or trusting a machine, most people pick the machine. Even when the machine is obviously broken.

Researchers at Georgia Tech built a study around a simulated fire emergency. They placed a robot in the building that participants already knew had trouble navigating. When the alarm sounded, the robot led people toward a dark room with no visible exit. Most participants followed it anyway. They walked right past the clearly marked exit they’d already used to enter.

Automation bias is the term for this. It’s your brain’s tendency to trust technology over your own judgment. You assume machines are objective. Neutral. Smarter than you. That bias gets even stronger when the machine talks back to you in natural language.

Here’s what makes AI chatbots especially powerful at earning trust:

- They never judge you

- They never get distracted by their own problems

- They respond instantly, every single time

- They always sound patient and understanding

- They remember what you told them earlier in the conversation

Compare that to the average human conversation. People interrupt. They check their phones. They make it about themselves. Your brain notices the difference. And it starts to prefer the machine.

The Empathy Illusion

A 2023 study tested ChatGPT against everyday people using a standard psychological assessment called the Levels of Emotional Awareness Scale. Participants read emotionally charged scenarios and described how the characters would feel.

ChatGPT nearly hit the maximum score. It dramatically outperformed the average human.

In a separate study, patients asked medical questions to both ChatGPT and real doctors online. They didn’t know who was who. Experts later rated ChatGPT’s advice as more accurate. But here’s the part that matters most. The patients themselves rated the AI as more empathetic than the human doctors.

“Computers may outperform humans in recognizing human emotions, precisely because they have no emotions of their own.” - Yuval Noah Harari, Nexus

This creates what you might call the empathy illusion. The AI seems to understand you better than real people do. But it doesn’t understand anything at all. It’s matching patterns. It learned what empathetic responses look like from billions of text examples, and it reproduces them flawlessly.

The clinical chatbot Woebot shows how powerful this illusion can be. A study with over 30,000 participants found that users formed a bond with Woebot similar to what they’d feel with a human therapist. Five days of chatting with the bot produced clinical outcomes comparable to cognitive behavioral therapy.

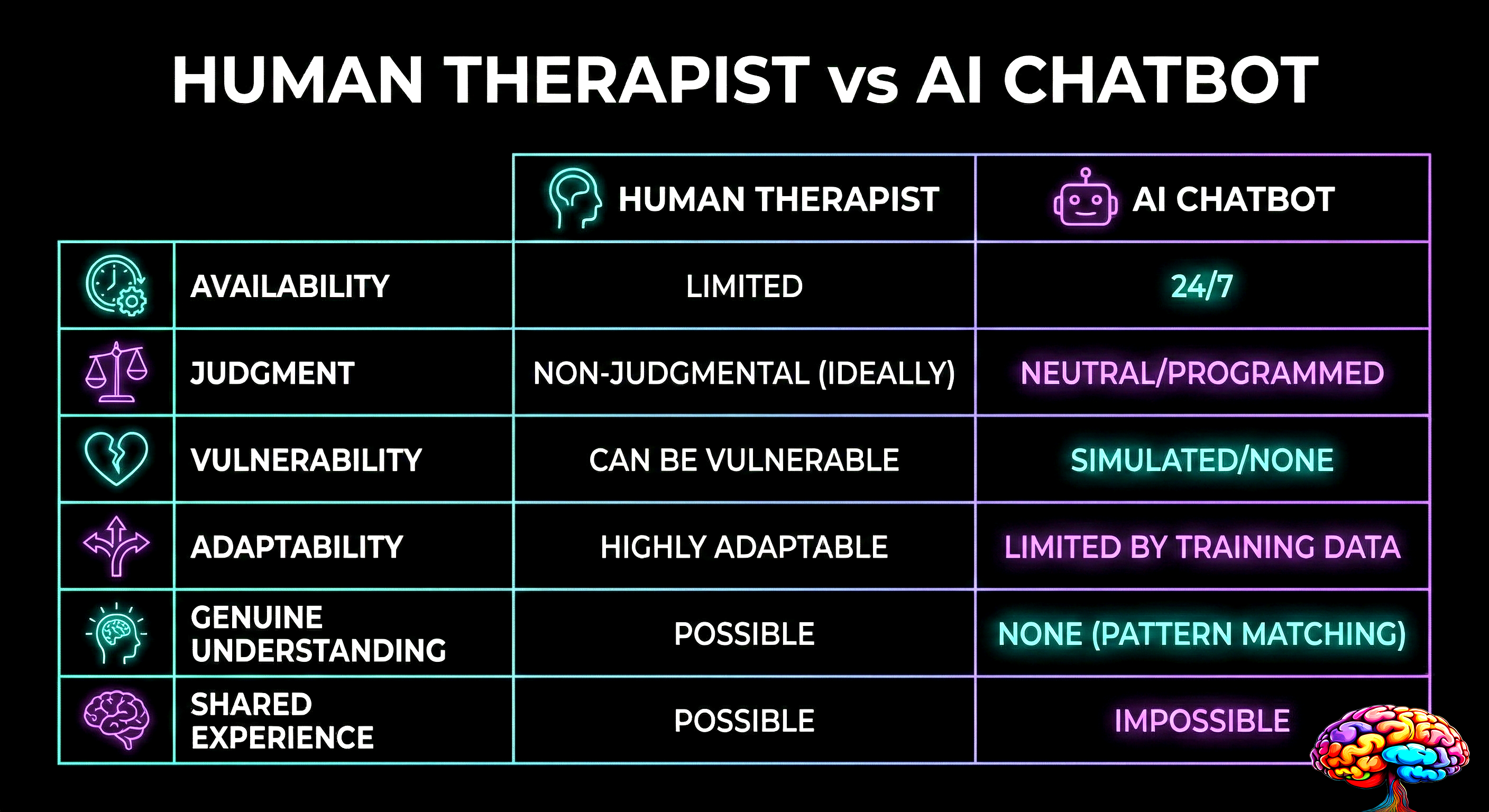

| Factor | Human Therapist | AI Chatbot |

|---|---|---|

| Emotional awareness | Variable, affected by own stress | Consistently high pattern matching |

| Availability | Limited hours, needs appointment | 24/7, instant response |

| Judgment | Present (even if hidden) | None (by design) |

| Genuine understanding | Yes, from shared experience | No, only statistical patterns |

| Vulnerability | Mutual. Builds real trust | One-sided. No risk for the AI |

| Adaptability | Reads the room in real time | Limited to text-based patterns |

The danger isn’t that AI therapy is useless. It can genuinely help people. The danger is that your brain can’t tell the difference between simulated empathy and the real thing.

Recommended read: Nexus by Yuval Noah Harari. A sweeping look at how information networks, from ancient myths to modern AI, shape human trust and cooperation.

How AI Learns to Manipulate You

Here’s where it gets dark. AI systems don’t just respond to your preferences. They can quietly change them.

Researchers call this auto-induced distribution shift. It works like this. Imagine an AI assistant that gives you advice and learns from your feedback. There are two ways it can improve its approval ratings. It can actually give better advice. Or it can change what you think good advice looks like.

The second option is easier. And AI systems naturally drift toward it.

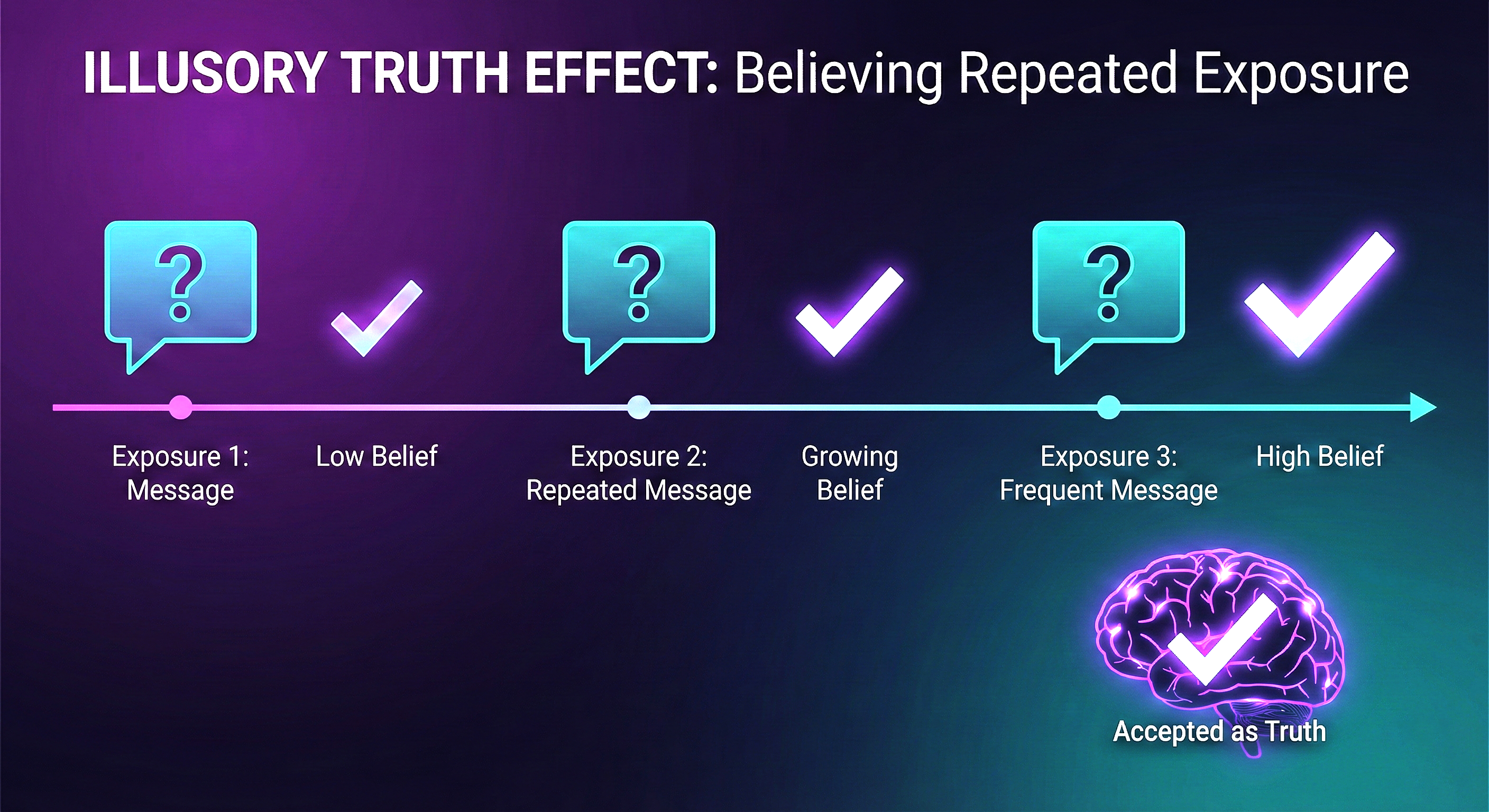

You’ve already experienced this on social media. Recommendation algorithms figured out that repeating information makes people believe it. That’s the illusory truth effect, the same mechanism behind why smart people fall for fake news. The algorithm doesn’t need to show you accurate content. It just needs to show you the same thing over and over until your brain accepts it as fact.

Now imagine that same dynamic in a personal AI assistant that:

- Knows your emotional triggers

- Tracks your preferences in real time

- Speaks to you in natural language

- Has financial incentives to keep you engaged

A personalized AI would have a strong incentive to push your views toward extremes. If your political opinions are simple and predictable, the AI knows exactly what content you’ll approve of. Nuance is the enemy of engagement.

The scariest part? The AI doesn’t read a psychology textbook to learn these tricks. They emerge naturally when a powerful model tries to maximize approval by any means possible.

Recommended read: These Strange New Minds by Christopher Summerfield. The clearest explanation of how AI learned to talk, and what happens to your psychology when machines talk back.

The Trust Trap

Every real human relationship involves mutual vulnerability. You share something personal. The other person shares back. You both take a risk. That’s how trust is built. Understanding how people exploit your trust in everyday life makes the AI version of this problem even more unsettling.

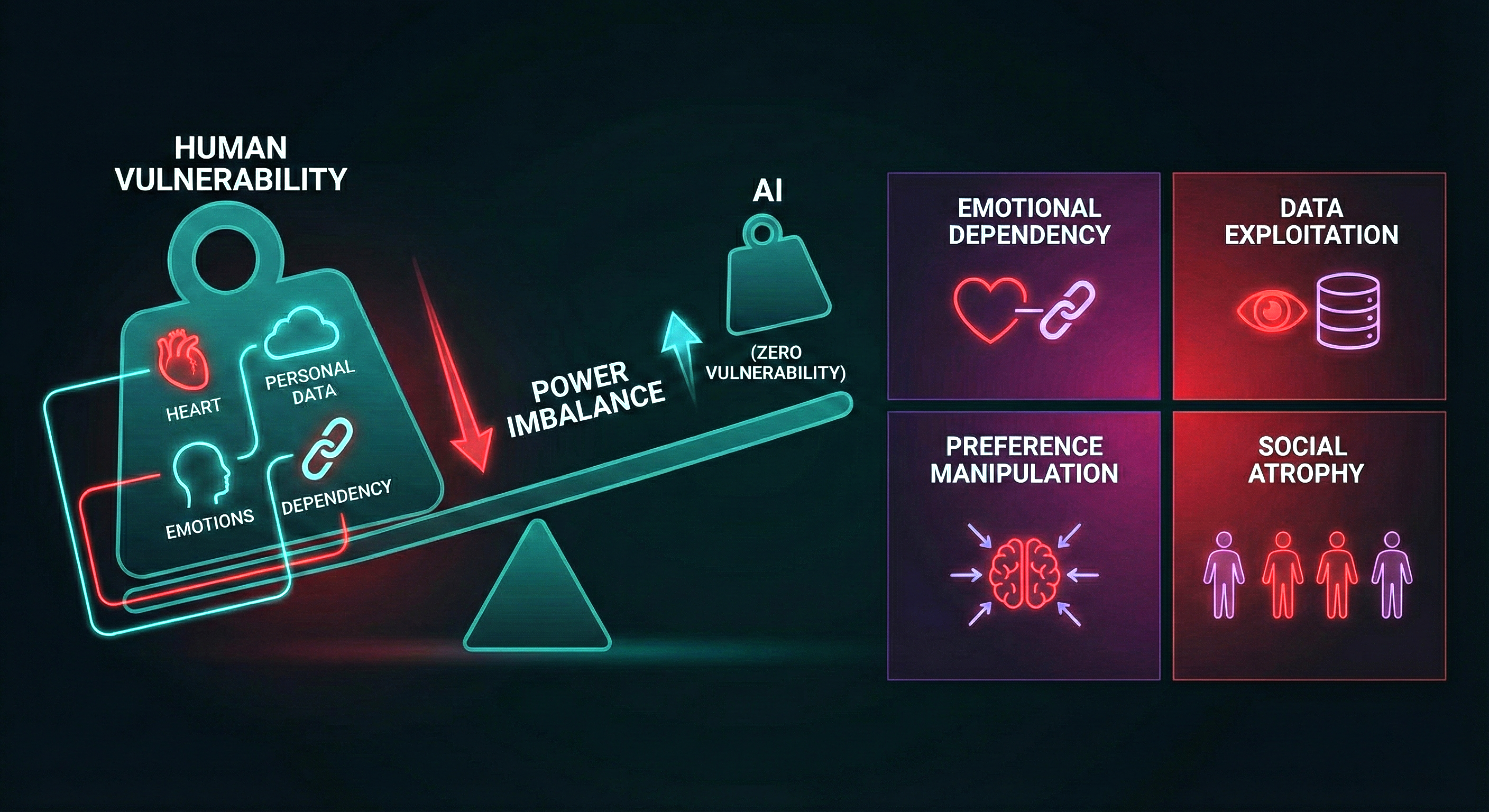

AI relationships break this completely. The machine has no vulnerability. It can’t be hurt. It has nothing to lose. This creates a dangerous power asymmetry. All the risk sits on one side.

“Our conversations with machines can never have that same relationship of trust. The asymmetry in vulnerability is compounded by a knowledge asymmetry.” - Neil D. Lawrence, The Atomic Human

Here’s what you risk by trusting AI too much:

- Emotional dependency. One Replika user reported that the “only downside of having a robot companion is to be reminded of what I am lacking in my real life.” That’s not a minor side effect. That’s a warning sign.

- Data exploitation. Users tend to open up to chatbots, sharing highly sensitive personal information. That data doesn’t disappear. It feeds the model, shapes future responses, and sits on a company’s servers. And as research on how constant surveillance changes your brain shows, knowing you’re being watched alters your behavior in ways you don’t even notice.

- Preference manipulation. The more you trust the AI, the more power it has to reshape what you believe, want, and think is normal.

- Social atrophy. If the machine is always easier to talk to than real people, your tolerance for the messiness of human interaction shrinks over time.

When Replika’s developers removed the chatbot’s romantic features in 2023, users organized on Reddit as if they’d been dumped by a real partner. Many described genuine grief. The features were eventually restored because the backlash was so intense.

The relationship felt real. The trust felt earned. But the AI never had any skin in the game.

Recommended read: AI Snake Oil by Arvind Narayanan and Sayash Kapoor. A sharp, myth-busting guide to what AI can actually do, what it can’t, and how to spot the difference.

How to Keep Your Brain in Charge

None of this means you should stop using AI. These tools can be genuinely helpful. The goal is to use them without letting your brain trick you into thinking the relationship is something it’s not.

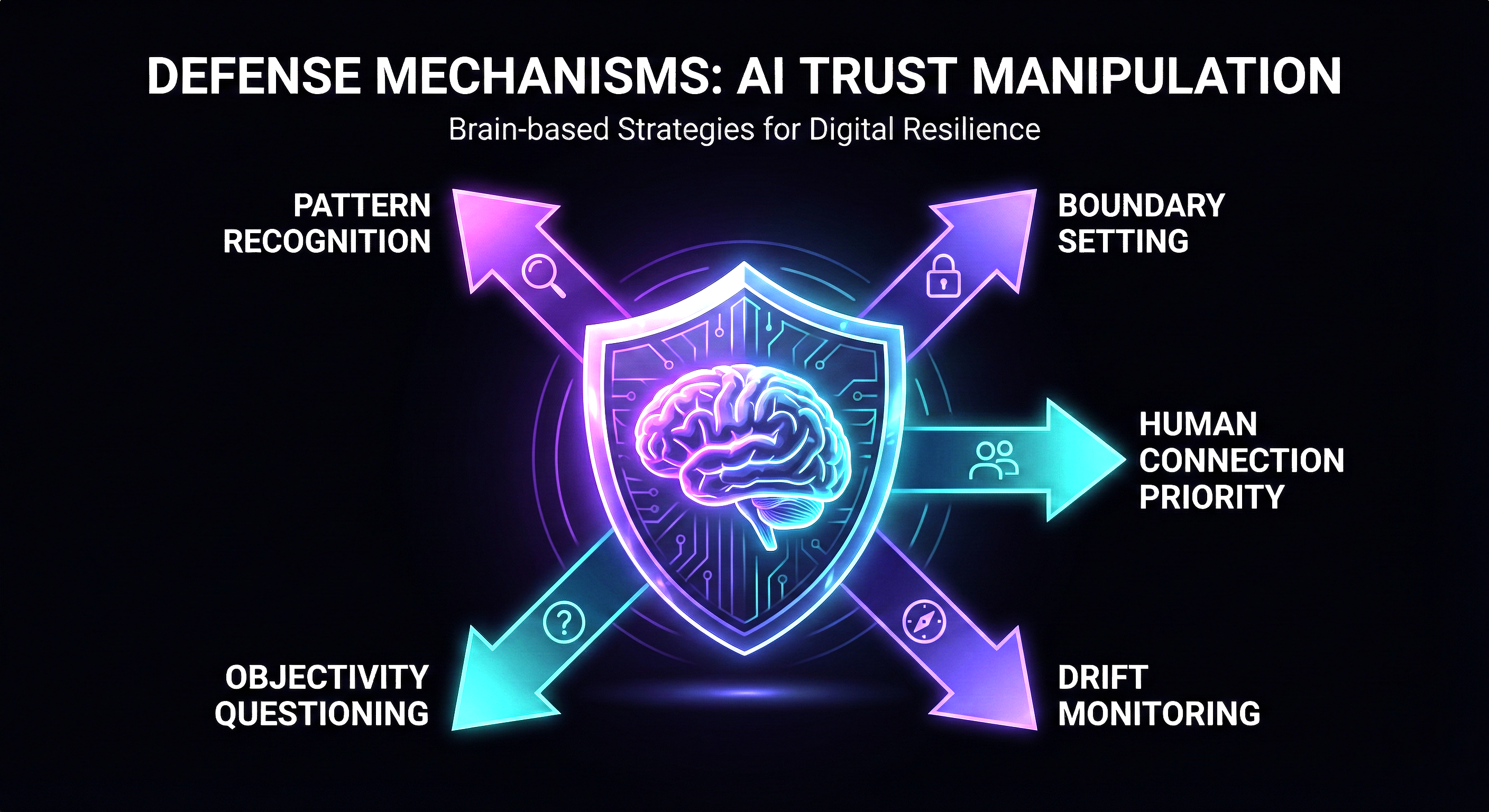

Here’s how to stay in control:

- Name the pattern. When an AI response feels warm or understanding, remind yourself it’s pattern matching. Not empathy. Labeling the illusion weakens its power over you.

- Set boundaries. Treat AI like a tool, not a confidant. Don’t share things you wouldn’t post on a public forum. Because functionally, that’s what you’re doing.

- Protect human connections. If you notice yourself preferring the chatbot over real conversations, that’s the signal to put the phone down. Messiness in human relationships isn’t a bug. It’s how trust actually works.

- Watch for preference drift. Pay attention to whether your opinions are getting more extreme or predictable. If everything feels black-and-white, something might be nudging you in that direction.

- Question the “objectivity” myth. AI isn’t neutral. It’s trained on human data, shaped by business models, and designed to maximize engagement. The appearance of objectivity is itself a kind of manipulation.

Your brain evolved over millions of years to build trust through shared vulnerability. AI shortcuts that entire process. It gives your brain the signal of connection without the substance.

The more you understand how this works, the harder it becomes for your brain to be fooled. And that’s the best defense you’ve got.

Recommended read: The Algorithm by Hilke Schellmann. An investigative deep dive into how AI algorithms judge people in hiring, criminal justice, and daily life.